When supporting multiple systems, it is difficult for all staff to be across every support type and query that each system can generate. We in the Learning Technologies Unit have been running some experiments aimed at better knowledge management through technology.

The problem

The idea of knowledge base (KB) articles has been around for many years, and they are used widely within CSU. A KB article guides a user or support person through an investigative process. What the KB model lacks is a way to tell which article is appropriate at any given time, and which additional articles are needed when the first does not resolve the query. Until now, the general model has relied on search queries.

Our approach

This problem prompted us to pursue the creation of a knowledge tree. Starting from an initial symptom, a person could be guided through a series of branching questions to a resolution. A major benefit is that the user would not need high-level knowledge of the area of enquiry.
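The knowledge tree idea can be sketched in a few lines of Python. This is only an illustration of the branching structure, not the tool we built; the `Node` class, the `walk` function and the example questions are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """One step in the tree: a question with branches, or a final resolution."""
    text: str
    branches: Dict[str, "Node"] = field(default_factory=dict)

    @property
    def is_resolution(self) -> bool:
        # A node with no branches is a leaf, i.e. the advice given to the user.
        return not self.branches


def walk(node: Node, answers: List[str]) -> Node:
    """Follow a list of answers from the initial symptom towards a resolution."""
    for answer in answers:
        if node.is_resolution:
            break
        if answer not in node.branches:
            raise KeyError(f"No branch {answer!r} for question {node.text!r}")
        node = node.branches[answer]
    return node


# Hypothetical tree content, invented purely for illustration.
tree = Node(
    "Can you see the assignment in the submission list?",
    {
        "yes": Node(
            "Does the download button respond?",
            {
                "yes": Node("Check your browser's downloads folder."),
                "no": Node("Try a different browser, then contact support."),
            },
        ),
        "no": Node("Confirm you are enrolled in the subject, then contact support."),
    },
)

if __name__ == "__main__":
    print(walk(tree, ["yes", "no"]).text)
```

Because each question only offers the branches defined for it, the person answering never has to know which KB article they are heading towards.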

Several technologies were investigated to deliver our vision of an interactive KB. One such option was the use of chatbots. Ultimately we went in two different directions: a website of drill-down, clickable links for solving queries, and a chatbot.

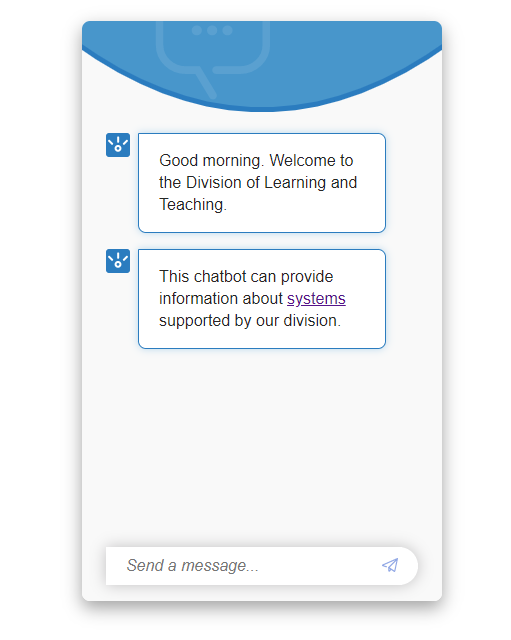

Our research suggested that chatbots can be effective at providing information to customers at the tier 0 (self-help) support level, reducing the number of enquiries made to support staff. Since a large portion of our support work is filling a customer’s knowledge gap by pointing them to existing procedures, a chatbot seemed ideal for giving customers a way to ask a question whenever they are using one of our services.

Our ultimate aim is a chatbot that can be asked, and can answer, many questions related to the online services we support. In theory, a chatbot should be able to ask additional questions to ensure that most avenues have been followed before a support staff member needs to intervene. This is particularly helpful if you can build in rare issues and technologies that are not used constantly.

A nice feature of a chatbot is that it can tie in with existing procedures and troubleshooting steps and, by asking exploratory questions, automate some of this work for an end user who needs assistance.

What we have found is that the logic of question and answer (decision trees) can take many forms. Effectively, you need to unpack how an interaction with a user will play out, regardless of the technology a chatbot uses.

We used the IBM Watson Assistant framework, as it was the most technically accessible option at the time, and we employed two different models for the conceptual design of a chatbot.

Model 1

Our initial approach was to create a chatbot within the constraints of the technology and the way it constructs its logic paths.

The chatbot is governed by three main inputs: Intents, Entities and Dialog.

1. Intents are how you detect what an end user wants to do. This is usually a repository of potential questions users will ask, e.g. “How do I download an assignment?”

2. Entities are labels you apply, like keywords for the chatbot to recognise.

3. Dialog is the core of the chatbot where the developer creates conversation trees and responses based on the Intents and Entities.

The first approach was to create direct responses to any given Intent. The interaction was a simple one where the user would ask a question, then the chatbot would analyse and match this to an Intent. The pre-defined answer to this Intent would be displayed.
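As a rough sketch of this first model, the Python below maps each Intent to a set of example questions and one pre-defined answer. The word-overlap scoring is a crude stand-in for the framework's own classifier, which we have not reproduced here; the intent names, example questions, responses and the 0.5 threshold are all assumptions made for illustration.

```python
import re
from typing import Dict, List, Optional, Set

# Hypothetical Intents, each with example questions a user might ask.
INTENT_EXAMPLES: Dict[str, List[str]] = {
    "download_assignment": [
        "How do I download an assignment?",
        "Where can I get my assignment file?",
    ],
    "submit_assignment": [
        "How do I submit an assignment?",
        "Where do I upload my work?",
    ],
}

# One pre-defined answer per Intent (placeholder wording).
RESPONSES: Dict[str, str] = {
    "download_assignment": "Open the assignment list and choose Download.",
    "submit_assignment": "Open the assignment list and choose Submit.",
}

FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def tokens(text: str) -> Set[str]:
    """Lower-case the text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def match_intent(question: str, threshold: float = 0.5) -> Optional[str]:
    """Return the Intent whose examples best overlap the question, if any."""
    words = tokens(question)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            example_words = tokens(example)
            # Jaccard similarity: shared words divided by all distinct words.
            score = len(words & example_words) / len(words | example_words)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


def reply(question: str) -> str:
    """Answer directly from the matched Intent, or fall back."""
    intent = match_intent(question)
    return RESPONSES.get(intent, FALLBACK)


if __name__ == "__main__":
    print(reply("How do I download an assignment?"))
```

Every new system needs its own full set of Intents in this layout, so similar questions about different systems soon start competing for the same match.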

This works fine for a single system, or a group of simple systems. But once you add complexity, such as the variety of overlapping systems supported by DLT, the line between Intents becomes blurred and it is difficult to get the answer you want from the chatbot without asking a very specific question.

Model 2

Our second approach was designed to combat this and allow Intents to be more generalised. In this version we employed Entities as labels for systems, roles and other keywords associated with technologies. Intents were reduced to simply identifying what the person wants to do with that Entity. So, with a question like “How do I download an assignment?” the chatbot identifies ‘download’ as an Intent and ‘assignment’ as an Entity. Doing this provided a more economical way to create Intents and Dialogs and gave us the flexibility to easily insert more systems into an existing structure.
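A minimal Python sketch of this second model is shown below. It separates the action (Intent) from the thing acted on (Entity) and looks up the response by the pair. The keyword tables and responses are hypothetical, and simple keyword spotting stands in for the framework's own recognition.

```python
import re
from typing import Dict, Optional, Set, Tuple

# Hypothetical keyword tables: Intents are actions, Entities are things.
INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "download": {"download", "retrieve"},
    "submit": {"submit", "upload"},
}
ENTITY_KEYWORDS: Dict[str, Set[str]] = {
    "assignment": {"assignment", "assignments"},
    "grade": {"grade", "grades", "mark", "marks"},
}

# Dialog responses keyed by (Intent, Entity); placeholder wording.
DIALOG: Dict[Tuple[str, str], str] = {
    ("download", "assignment"): "Open the assignment list and choose Download.",
    ("submit", "assignment"): "Open the assignment list and choose Submit.",
    ("download", "grade"): "Open the grades page and choose Export.",
}


def find_label(words: Set[str], table: Dict[str, Set[str]]) -> Optional[str]:
    """Return the first label whose keywords appear in the question."""
    for label, keywords in table.items():
        if words & keywords:
            return label
    return None


def parse(question: str) -> Tuple[Optional[str], Optional[str]]:
    """Split a question into an (Intent, Entity) pair."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    return find_label(words, INTENT_KEYWORDS), find_label(words, ENTITY_KEYWORDS)


def answer(question: str) -> str:
    """Look up the response, asking a follow-up question if a part is missing."""
    intent, entity = parse(question)
    if intent is None:
        return "What would you like to do?"
    if entity is None:
        return f"What would you like to {intent}?"
    return DIALOG.get((intent, entity), "I do not have an answer for that yet.")


if __name__ == "__main__":
    print(parse("How do I download an assignment?"))
    print(answer("How do I download an assignment?"))
```

Adding another system under this layout means adding one Entity and a few Dialog entries, while the existing Intents are reused unchanged.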

The chatbot technology we have used is essentially a conduit to test an idea. Each approach has benefits and flaws. One key lesson from this set of experiments is that planning is important when starting out with chatbot logic, and that you will most likely scrap an idea as you learn more about the requirements and the capabilities of a tool. There is more to the underlying technology that could be used to expand what it can do for us.

Next Steps

Have a try for yourself at the address below. The most fleshed-out example is the EASTS help.

https://assistant-chat-us-south.watsonplatform.net/web/public/06e4c23d-62f5-4b89-aeb6-f9875ece58f9

The nice thing about this particular technology is that it can be refined based on how people use it. So if you, for example, used the chatbot link above and were given an incorrect answer to a question, the developer can observe this and refine the chatbot with a few clicks so that the correct answer is given going forward.

Any feedback on the above would be greatly appreciated, as we are just experimenting at this stage with a view to moving to a university-supported platform when that time comes.

Contributed by Matt Deans mdeans@csu.edu.au and

Sam Parker sparker@csu.edu.au

Learning Technologies Unit, Division of Learning and Teaching